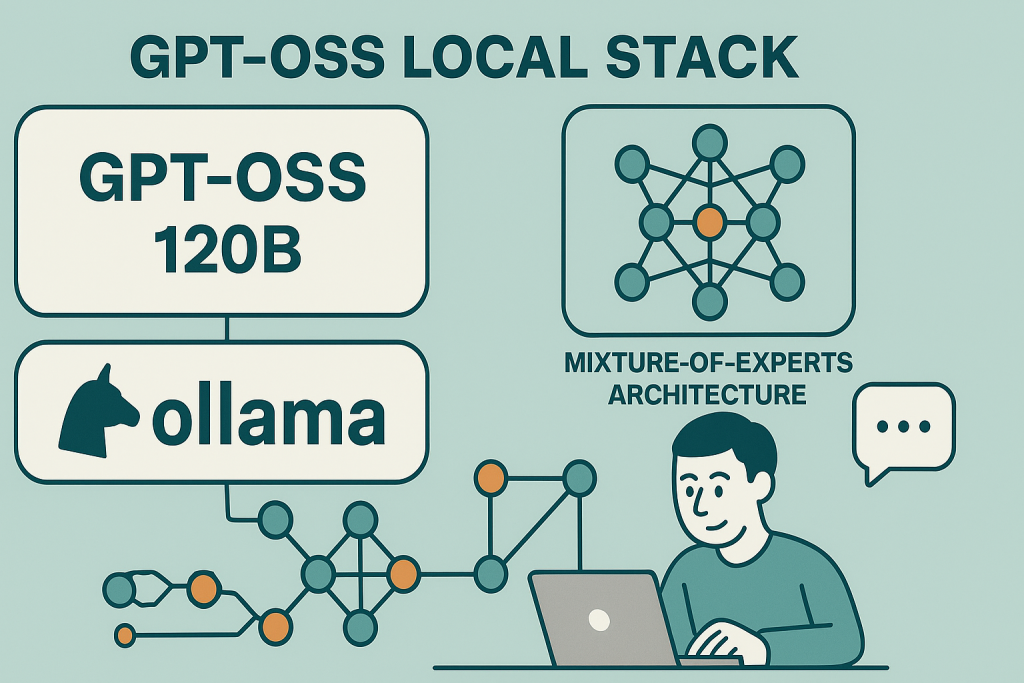

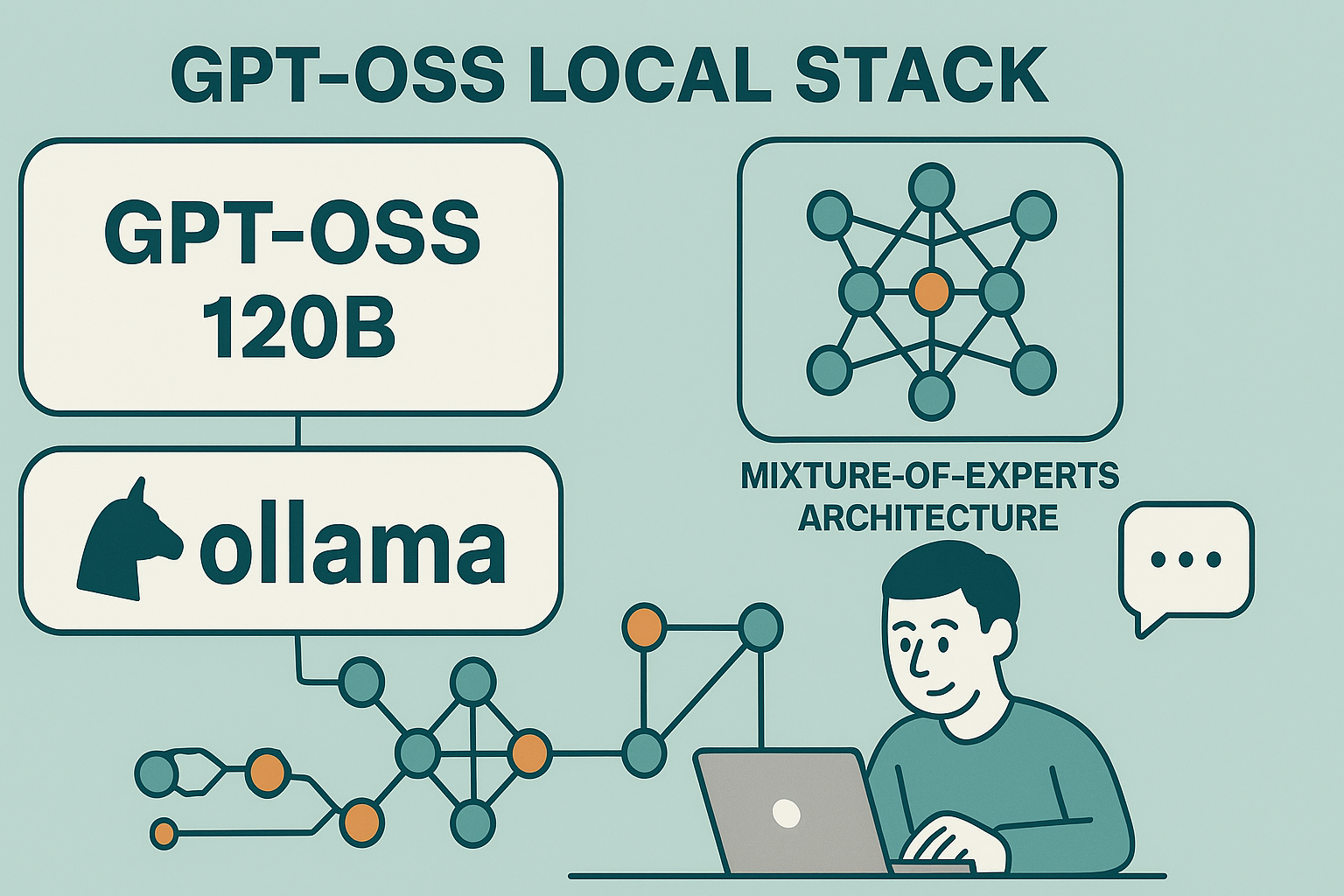

OpenAI has just dropped a seismic update in the AI world: the release of gpt-oss-120b and gpt-oss-20b (GitHub: openai/gpt-oss), two open-weight language models and its first open-weight release since GPT-2. This move marks a return to open development principles and unlocks powerful new possibilities for creators, developers, and researchers who want to run cutting-edge models locally: no cloud, no gatekeeping.

🚀 What Is GPT-OSS?

GPT-OSS comes in two flavors (see OpenAI's guide, "How to run gpt-oss locally with Ollama"):

| Model | Total Parameters | Active Params/Token | VRAM / Unified Memory | Ideal Use Case |
|---|---|---|---|---|
| gpt-oss:20b | 21B | 3.6B | ≥16GB | Consumer laptops, Apple Silicon Macs |
| gpt-oss:120b | 117B | 5.1B | ≥60GB | Multi-GPU workstations, enterprise setups |
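As a rough sanity check on the memory column, here is a back-of-envelope estimate of the weight footprint. It assumes every weight costs about 4.25 bits, the figure OpenAI's model card gives for the MXFP4-quantized MoE weights; treat that as an assumption here. Real checkpoints keep some layers at higher precision, and inference also needs room for activations and the KV cache, so the result is a floor, not a requirement.

```python
# Back-of-envelope weight-memory estimate for the two gpt-oss variants.
# Assumption: all weights at ~4.25 bits/parameter (MXFP4 including scales).
# Real checkpoints keep some layers in higher precision, and inference also
# needs memory for activations and the KV cache, so this is a lower bound.

BITS_PER_PARAM = 4.25  # assumed average storage cost per weight

MODELS = {
    "gpt-oss:20b": 21e9,   # total parameters, from the table
    "gpt-oss:120b": 117e9,
}


def weight_gib(num_params: float, bits_per_param: float = BITS_PER_PARAM) -> float:
    """Return the approximate weight footprint in GiB."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    for name, params in MODELS.items():
        print(f"{name}: ~{weight_gib(params):.1f} GiB of weights")
```

Under that assumption the estimates come out around 10.4 GiB and 57.9 GiB, which fits the ≥16GB and ≥60GB guidance once runtime overhead is added.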

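These models use a Mixture-of-Experts (MoE) architecture: only a small subset of experts runs for each token, which is why the active parameters per token are a fraction of the total and inference stays efficient. Both are licensed under Apache 2.0, meaning you can use, modify, and monetize them freely.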
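A toy example makes the "active vs. total parameters" distinction concrete. This is a minimal pure-Python sketch of top-k expert routing under made-up sizes (8 scalar "experts", k=2, invented router scores); it is not gpt-oss's actual implementation, where each expert is a feed-forward block inside every transformer layer.

```python
import math
from typing import Callable, List, Tuple

# Toy top-k Mixture-of-Experts layer (hypothetical sizes, not gpt-oss's).
# Each "expert" is a tiny function; a real expert is a feed-forward block.
Expert = Callable[[float], float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float, gate_logits: List[float], experts: List[Expert], k: int
) -> Tuple[float, List[int]]:
    """Route input x to the top-k experts and mix their outputs.

    Only the selected experts are evaluated, which is why the parameters
    touched per token are far fewer than the model's total.
    """
    # Pick the k experts with the highest router scores.
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Renormalize the router scores over the chosen experts only.
    weights = softmax([gate_logits[i] for i in top])
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top


if __name__ == "__main__":
    # 8 toy experts: expert i multiplies its input by (i + 1).
    experts = [lambda x, s=i + 1: s * x for i in range(8)]
    logits = [0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2]  # made-up router scores
    y, used = moe_forward(1.0, logits, experts, k=2)
    print(f"experts used: {used}, output: {y:.3f}")
```

Six of the eight experts never run for this input; scaling the same idea up is how a 117B-parameter model can touch only about 5.1B parameters per token.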

🛠️ How to Deploy GPT-OSS Locally with Ollama

Ollama is the go-to platform for running GPT-OSS on your own hardware. Here’s a quick guide:

⚙️ Setup Steps

- Download and install Ollama for your OS from ollama.com/download.

- Ollama supports macOS, Linux, and Windows 10+.
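Once Ollama is installed, its local server listens on port 11434 by default. A quick way to confirm it is up from Python is to query the `/api/version` endpoint. This sketch uses only the standard library and treats any connection or parsing error as "not running"; the port is the default and may differ if you have set `OLLAMA_HOST`.

```python
import json
import urllib.error
import urllib.request
from typing import Optional

# Default address of the local Ollama server (change it if you set OLLAMA_HOST).
OLLAMA_URL = "http://localhost:11434"


def ollama_version(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> Optional[str]:
    """Return the server's version string, or None if Ollama isn't reachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a non-JSON reply all mean "not usable".
        return None


if __name__ == "__main__":
    version = ollama_version()
    if version:
        print(f"Ollama {version} is running")
    else:
        print("Ollama is not reachable; start the app or run `ollama serve`")
```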

🛠️ Pull the Model

```bash
ollama pull gpt-oss:20b
# or
ollama pull gpt-oss:120b
```
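To check from a script which models you have already pulled, Ollama's `/api/tags` endpoint returns the locally installed models as JSON. The helper below keeps the parsing separate from the HTTP call so it can be tested on its own; it relies only on each entry's `name` field, and the model names it looks for are the two tags above.

```python
import json
import urllib.request
from typing import List

OLLAMA_URL = "http://localhost:11434"  # default local Ollama address


def installed_models(tags: dict) -> List[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in tags.get("models", [])]


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Fetch the list of locally pulled models from the Ollama server."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        names = installed_models(fetch_tags())
    except OSError as err:  # urllib.error.URLError is a subclass of OSError
        print(f"Could not reach Ollama: {err}")
    else:
        for wanted in ("gpt-oss:20b", "gpt-oss:120b"):
            status = "installed" if wanted in names else "not pulled yet"
            print(f"{wanted}: {status}")
```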

💻 Run the Model

```bash
ollama run gpt-oss:20b
```

This opens an interactive chat session in your terminal; Ollama applies OpenAI's Harmony response format, which gpt-oss expects, behind the scenes. Ollama also exposes a Chat Completions-compatible API, so you can use the OpenAI SDK with only minor changes.
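Under the hood, a chat session is just a growing list of messages that is resent on every turn. The sketch below shows that bookkeeping in Chat Completions message format. `ChatSession` and `send` are hypothetical names: `send` stands in for whatever actually calls the model, so the history logic can be tried without a running server.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical model-calling function: takes the full history, returns reply text.
SendFn = Callable[[List[Message]], str]


class ChatSession:
    """Keeps the running conversation so each request includes prior turns."""

    def __init__(self, send: SendFn, system_prompt: str = "You are a helpful assistant."):
        self.send = send
        self.messages: List[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        reply = self.send(self.messages)  # the model sees the whole history
        self.messages.append({"role": "assistant", "content": reply})
        return reply


if __name__ == "__main__":
    # Fake backend for demonstration: reports how many messages it received.
    def echo_send(history: List[Message]) -> str:
        return f"I can see {len(history)} messages so far."

    chat = ChatSession(echo_send)
    print(chat.ask("Hi!"))
    print(chat.ask("What did I just say?"))
```

To talk to gpt-oss for real, `send` could pass `history` as `messages` to `client.chat.completions.create(model="gpt-oss:20b", ...)` and return `.choices[0].message.content`, as in the API example below.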

🔌 Local API Integration

```python
from openai import OpenAI

client = OpenAI(
    base_url="http://localhost:11434/v1",  # Ollama's local OpenAI-compatible endpoint
    api_key="ollama",  # Dummy key
)

response = client.chat.completions.create(
    model="gpt-oss:20b",
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Explain MXFP4 quantization."},
    ],
)

print(response.choices[0].message.content)
```
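For longer answers you may prefer to stream tokens as they arrive. With the OpenAI Python SDK, passing `stream=True` yields chunks whose text sits in `choices[0].delta.content`, which can be `None` on some chunks. This sketch keeps the assembly logic separate from the network call; it assumes the same local endpoint and dummy key as above, and `collect_stream` and `stream_answer` are hypothetical helper names. The `openai` import happens only when you actually stream.

```python
from types import SimpleNamespace
from typing import Iterable


def collect_stream(chunks: Iterable, echo: bool = True) -> str:
    """Concatenate streamed delta text, optionally printing it as it arrives."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:
            continue  # some chunks (e.g. usage-only) carry no choices
        delta = chunk.choices[0].delta.content
        if delta:  # skip None/empty deltas (role-only or final chunks)
            parts.append(delta)
            if echo:
                print(delta, end="", flush=True)
    if echo:
        print()
    return "".join(parts)


def stream_answer(prompt: str, model: str = "gpt-oss:20b") -> str:
    from openai import OpenAI  # imported lazily so collect_stream works without it

    client = OpenAI(base_url="http://localhost:11434/v1", api_key="ollama")
    stream = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,
    )
    return collect_stream(stream)


if __name__ == "__main__":
    # Offline demo with fake chunks shaped like the SDK's streaming objects.
    fake = [
        SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content=text))])
        for text in ["Hello, ", None, "local ", "world."]
    ]
    collect_stream(fake)
```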

🧠 Advanced Features

Function Calling: You can define tools and let GPT-OSS invoke them.
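Here is a minimal sketch of the tool-calling round trip in Chat Completions format. The `get_weather` tool, its canned result, and the `run_tool_calls` helper are hypothetical; the shape follows the OpenAI `tools` / `tool_calls` convention, which Ollama's compatible endpoint accepts, though tool support details can vary by Ollama version. The dispatch step is separated out so it can be exercised with a fake tool call.

```python
import json
from types import SimpleNamespace
from typing import Any, Callable, Dict, List


# Hypothetical tool; a real one might call a weather API.
def get_weather(city: str) -> str:
    return f"Sunny and 22°C in {city}"  # canned answer for illustration


TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

REGISTRY: Dict[str, Callable[..., str]] = {"get_weather": get_weather}


def run_tool_calls(tool_calls: List[Any]) -> List[Dict[str, str]]:
    """Execute each requested tool and build the 'tool' messages to send back."""
    results = []
    for call in tool_calls:
        fn = REGISTRY[call.function.name]
        args = json.loads(call.function.arguments)  # arguments arrive as a JSON string
        results.append({"role": "tool", "tool_call_id": call.id, "content": fn(**args)})
    return results


# Typical loop with the client from the API example above (not run here):
#   resp = client.chat.completions.create(model="gpt-oss:20b", messages=msgs, tools=TOOLS)
#   msg = resp.choices[0].message
#   if msg.tool_calls:
#       msgs.append(msg)  # keep the assistant's tool request in the history
#       msgs.extend(run_tool_calls(msg.tool_calls))
#       resp = client.chat.completions.create(model="gpt-oss:20b", messages=msgs, tools=TOOLS)

if __name__ == "__main__":
    fake_call = SimpleNamespace(
        id="call_1",
        function=SimpleNamespace(name="get_weather", arguments='{"city": "Paris"}'),
    )
    print(run_tool_calls([fake_call]))
```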

Agents SDK Integration: Use LiteLLM (Python) or the AI SDK (TypeScript) to plug GPT-OSS into OpenAI's Agents SDK.

Responses API Workaround: Ollama doesn't natively support the Responses API, but you can proxy requests through Hugging Face's Responses.js.

📰 Coverage Roundup: GPT-OSS in the News

Here are some key articles covering this release:

- TechRepublic: OpenAI Launches GPT-OSS

- VentureBeat: OpenAI Returns to Open Source Roots

- Ars Technica: OpenAI Releases First Open Models Since 2019

- OpenAI Blog: Introducing GPT-OSS

- GitHub Repo: GPT-OSS Models

🥜 Final Nut: Why This Matters for Creators

This release isn’t just a technical milestone—it’s a creative liberation.

- Privacy by Design: Run models offline, keeping sensitive data secure.

- Customization: Fine-tune models for satire, storytelling, education, or advocacy.

- No Vendor Lock-In: Build workflows without relying on proprietary APIs or cloud costs.

- Philosophical Shift: Aligns with values of transparency, autonomy, and democratized access.

Whether you’re crafting satirical scripts, building educational tools, or exploring digital rights, GPT-OSS + Ollama gives you the freedom to create on your own terms.

