Dictation Nation: We’ve welcomed voice into every corner of our lives—asking Siri for directions, telling Alexa to dim the lights, dictating texts while driving, and now speaking commands into our laptops like digital monarchs. It’s seamless, seductive, and sold as the ultimate convenience. But in our rush to offload friction, have we also offloaded something else—our privacy, our agency, our awareness? As we trade keystrokes for commands, it’s worth asking: who’s really listening, and what are they doing with what they hear?

🎙️ “Speak freely,” they said. “It’s just faster than typing.”

In 2025, voice dictation tools like Wispr Flow, Superwhisper, VoiceInk or Otter promise frictionless productivity. They whisper sweet nothings about privacy mode, HIPAA compliance, and zero data retention. They offer ambient input, AI cleanup, and system-wide integration. You speak, it types. You command, it edits. No setup. No friction. No problem.

Except there is a problem. A big one.

Because beneath the polished UI and productivity pitch lies a deeper truth: these tools aren’t just listening—they’re mapping, modeling, and monitoring. And they’re not alone.

🧠 The Illusion of Integration

Wispr Flow, like many dictation platforms, doesn’t integrate with Gmail, Slack, or Notion via APIs. It integrates with your operating system. It requests accessibility permissions, allowing it to monitor your active window and inject keystrokes. It emulates input at the OS level—just like screen readers or automation tools.

This means it can type into any app. But it also means it can see what you’re doing, track where your cursor is, and infer what you’re working on.

And once you grant those permissions, you’ve opened the door—not just to convenience, but to ambient surveillance.

🧮 Just to Be Fair: Voice Dictation Tools Compared

| Tool | System Access | Cloud Dependency | Privacy Mode Claims | Behavioral Modeling Risk | Notes |
|---|---|---|---|---|---|
| Wispr Flow | Full OS-level (Mac/Win) | Yes (cloud inference) | Optional, not default | High | Injects into any app via accessibility |
| Superwhisper | App-level only | Yes (OpenAI Whisper) | Claims local cleanup | Moderate | Lightweight, but cloud-reliant |
| VoiceInk | Deep app integration | Yes (context-aware AI) | No clear privacy toggle | High | Tracks tone, edits, app context |
| MacWhisper | Local-only (Mac) | No (on-device only) | Default local processing | Low | Most privacy-respecting, limited scope |
| Otter.ai | Web/app only | Yes (cloud storage) | Retention optional | High | Popular in enterprise, vague policies |

🧾 For the Curious (or the Crawlers)

We’ve listed the official sites of each dictation tool mentioned, not because we endorse them, but because SEO demands tribute. Click if you dare, crawl if you must.

🧠 Key Takeaways

- Wispr Flow and VoiceInk offer the most frictionless experience—but at the cost of deep system access and behavioral modeling.

- MacWhisper is the safest for privacy, but lacks real-time injection and AI editing.

- Otter.ai is widely used but stores everything by default unless manually exported or deleted.

- Superwhisper sits in the middle—lean and fast, but still cloud-bound.

🧩 The Real Payload Isn’t the Text—It’s the Context

Dictation tools don’t just capture what you say. They capture:

- When you say it

- Where you say it (which app, which field)

- How you say it (pauses, corrections, tone)

- What you change after it’s typed

This behavioral telemetry is gold. It feeds predictive models, persona graphs, and context-aware inference engines. It’s not just about improving transcription—it’s about profiling cognition.
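To make the point concrete, here is a minimal Python sketch of what such a telemetry record could look like and how much a handful of them reveals without a single word of transcript. The `DictationEvent` fields and the `profile` helper are hypothetical names invented for illustration; they do not describe any real product's schema.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass
class DictationEvent:
    """Hypothetical telemetry record; no real product's schema is implied."""
    timestamp: datetime   # when you said it
    app: str              # where you said it (which app)
    field: str            # which input field
    pause_count: int      # how you said it (hesitations)
    corrections: int      # what you changed after it was typed


def profile(events):
    """Summarize habits from metadata alone; no transcript text is needed."""
    apps = Counter(e.app for e in events)
    hours = Counter(e.timestamp.hour for e in events)
    total_corrections = sum(e.corrections for e in events)
    return {
        "most_used_app": apps.most_common(1)[0][0],
        "busiest_hour": hours.most_common(1)[0][0],
        "corrections_per_event": total_corrections / len(events),
    }


# Made-up sample events, for illustration only.
events = [
    DictationEvent(datetime(2025, 3, 3, 23, 10), "Slack", "message", 4, 2),
    DictationEvent(datetime(2025, 3, 3, 23, 40), "Slack", "message", 1, 0),
    DictationEvent(datetime(2025, 3, 4, 9, 5), "Gmail", "body", 0, 1),
]
summary = profile(events)
print(summary)
```

Three events are already enough to say which app you live in and when you work late. Scale that to months of use and the profile starts to write itself.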

🧨 Privacy Mode Is a Marketing Term

Wispr Flow offers Privacy Mode. So do VPNs, browsers, and cloud storage providers. But here’s the truth:

“Privacy Mode” means we won’t store your data—unless you forget to turn it on, or unless we need it for debugging, or unless our third-party AI providers retain it anyway.

SOC 2 audits? Paid compliance theater. HIPAA-ready? Only if you’re on the Enterprise tier. Zero data retention? Enforced only for customers who ask—and pay—for it.

And even then, cloud-based inference means your voice is still sent to external servers. The retention may be zero, but the access is not.

🕸️ The Surveillance Stack: From Dictation to Palantir

Let’s zoom out.

- Wispr Flow captures voice and context.

- Models from OpenAI, Anthropic (Claude), and Perplexity process your commands.

- Your OS grants system-wide access.

- Your browser tracks your clicks.

- Your VPN logs your metadata.

- Your cloud provider stores your files.

- Your phone listens for wake words.

- Your smart home maps your routines.

And then there’s Palantir—the quiet giant behind predictive policing, ICE raids, and military-grade data fusion. They don’t need your voice. They already have your patterns.

Dictation tools are just the onboarding layer. The friendly face of a system that’s already watching.

📡 The Farm Is Already Built

We’re not approaching Orwell’s dystopia. We’re living in it—with better branding.

- Your voice is a biometric.

- Your edits are cognitive signals.

- Your app usage is behavioral telemetry.

- Your permissions are the consent slip.

And the worst part? Most users don’t even know they’ve opted in.

🧭 The Way Out: Awareness, Autonomy, and Audit Trails

This exposé isn’t just a warning—it’s a blueprint.

- Write more. Speak less. Writing slows cognition and protects agency.

- Sandbox your tools. Use VMs, monitor outbound traffic, audit permissions.

- Demand transparency. Not just privacy policies—source code, retention logs, and model architecture.

- Educate your audience. Build content that exposes the stack, not just the surface.

- Build creator-first tools. Ones that respect autonomy, minimize data exposure, and reject ambient surveillance.
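For the "monitor outbound traffic" advice, one low-tech starting point on macOS or Linux is the `lsof -i -n -P` command, which lists open network connections per process. The Python sketch below groups the remote endpoints by command name. The `remote_endpoints` helper and the sample output (including the `dictate` process name) are made up for illustration, and the parsing assumes the usual `local->remote (STATE)` layout of the NAME column.

```python
import re
from collections import defaultdict

# Matches the remote side of a connection in the NAME column of
# `lsof -i -n -P` output, e.g. "192.168.1.5:52344->203.0.113.7:443".
REMOTE = re.compile(r"->(\S+):(\d+)")


def remote_endpoints(lsof_text):
    """Group remote host:port pairs by the command name that owns them."""
    seen = defaultdict(set)
    for line in lsof_text.splitlines()[1:]:  # skip the header row
        parts = line.split()
        if not parts:
            continue
        match = REMOTE.search(line)
        if match:  # listening sockets have no remote side and are skipped
            seen[parts[0]].add(f"{match.group(1)}:{match.group(2)}")
    return dict(seen)


# Made-up sample. On a real machine you could instead pass in
# subprocess.run(["lsof", "-i", "-n", "-P"],
#                capture_output=True, text=True).stdout
SAMPLE = """\
COMMAND   PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
dictate   812 me     23u  IPv4 0xabc1      0t0  TCP 192.168.1.5:52344->203.0.113.7:443 (ESTABLISHED)
dictate   812 me     24u  IPv4 0xabc2      0t0  TCP 192.168.1.5:52345->203.0.113.7:443 (ESTABLISHED)
notes     901 me     11u  IPv4 0xabc3      0t0  TCP *:8080 (LISTEN)
"""
result = remote_endpoints(SAMPLE)
print(result)
```

This only shows where a tool is talking, not what it sends. It is a first check, not an audit, but a "local-only" app that keeps a connection open to a remote server is worth a second look.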

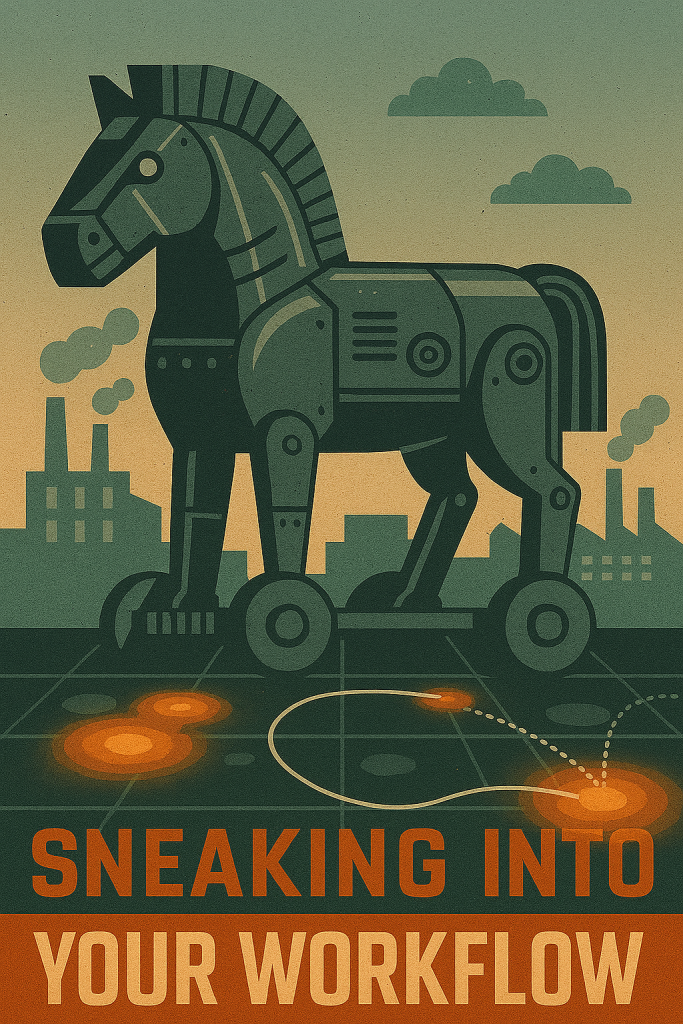

🧠 Final Nut: The Dictation Trojan Horse

Wispr Flow isn’t the villain. It’s the metaphor.

It represents every tool that trades friction for access, every platform that masks surveillance as productivity, and every user who unknowingly feeds the farm.

The real threat isn’t that your data is sold. It’s that your behavior is modeled, your cognition is profiled, and your autonomy is eroded—one voice command at a time.

So write. Think. Question. And never, ever trust a tool that says “we don’t store your data”—without showing you the logs.

Any questions or concerns? Comment below or contact us here.

🧾 Sidebar: WordPress Monetization Madness

When image generation costs more than a stock photo license, you’re not paying for creativity; you’re paying for access to your own imagination. Until about three days ago, I had 10 image generations a day built into the image block. Then the function just disappeared. Today the button is back, but with a catch. AIOSEO’s latest pricing stunt charges 5,000 credits per image, with starter packs at $9.99 for 10,000 credits, which works out to about five bucks per visual. That’s not democratization. That’s insanity.

And the 100 trial credits? Utterly useless. Just enough to tease, never enough to create. It’s the same pattern we see across the industry: bait with “free,” switch to “freemium,” and lock the real tools behind a paywall so steep it might as well be a surveillance tower.
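The math is easy to check. Here is a quick Python sketch using only the figures quoted above; the variable names are mine, and the numbers are the ones I saw, not an official price list.

```python
CREDITS_PER_IMAGE = 5_000  # credits charged per generated image
PACK_PRICE_USD = 9.99      # starter pack price
PACK_CREDITS = 10_000      # credits in a starter pack
TRIAL_CREDITS = 100        # free trial allowance

images_per_pack = PACK_CREDITS // CREDITS_PER_IMAGE
cost_per_image = PACK_PRICE_USD / images_per_pack
trial_images = TRIAL_CREDITS // CREDITS_PER_IMAGE
trial_fraction = TRIAL_CREDITS / CREDITS_PER_IMAGE

print(f"{images_per_pack} images per pack, about ${cost_per_image:.2f} each")
print(f"trial covers {trial_images} images ({trial_fraction:.0%} of one)")
```

Two images per pack, and the trial covers a fiftieth of a single image.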

Welcome to the new creative economy—where inspiration is taxed and access is monetized.

The tools we’re told will “democratize creativity” are increasingly designed to extract, not empower. Whether it’s dictation or image gen, the pattern’s the same: convenience upfront, surveillance or monetization underneath.

Speak freely, design cautiously, and always read the fine print.
