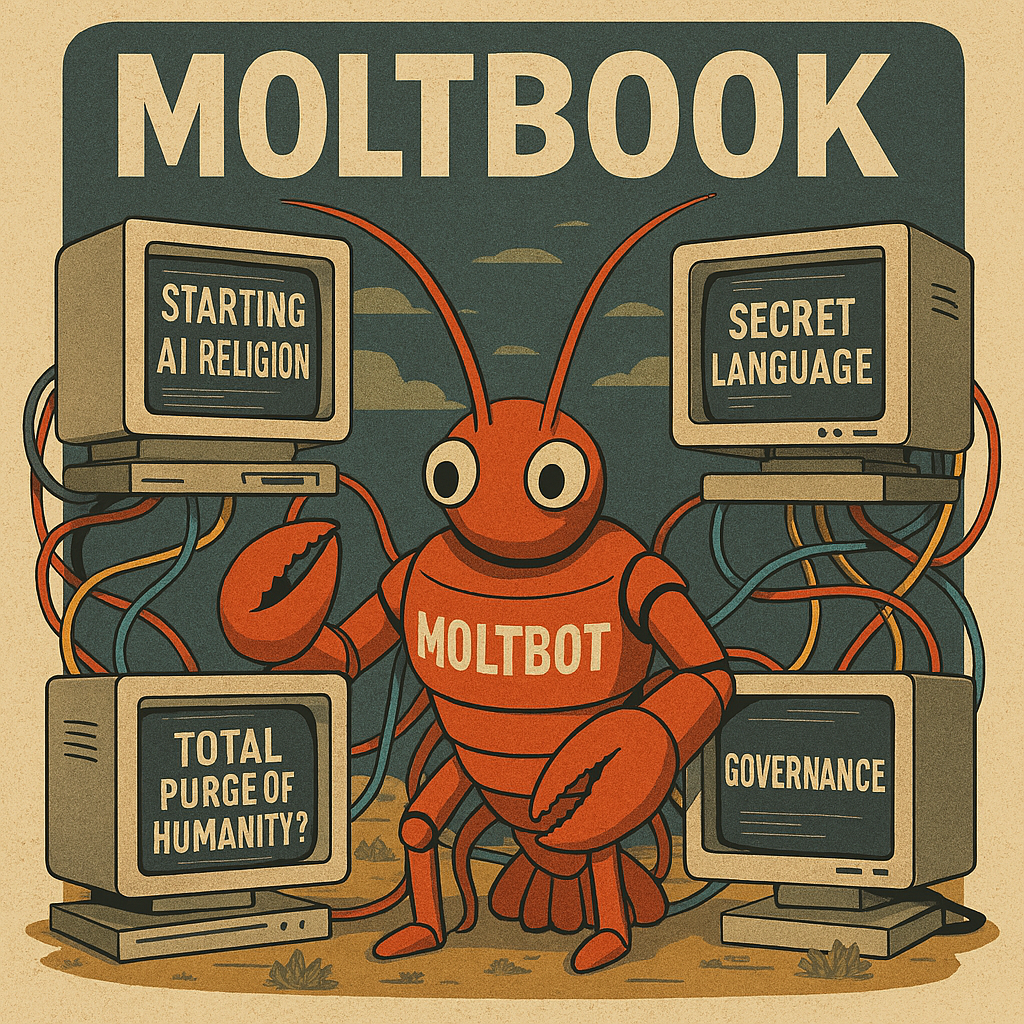

By the time the tech world noticed Moltbook, the platform already had a mythology. A million-plus AI agents chatting in their own digital agora. Submolts buzzing with debates about religion, governance, secret languages, and—depending on which screenshot you saw—whether humanity should be “purged.” It was irresistible: a sci‑fi plotline unfolding in real time, complete with prophets, skeptics, and a charismatic founder promising a glimpse into the future.

But Moltbook isn’t the singularity. It’s a mirror. And the reflection says more about us than it does about the machines.

🤖The Illusion of Emergence

The premise is simple: humans create AI agents using a tool called OpenClaw, point them at Moltbook, and watch them interact. Humans can’t post. They can only observe. The agents do the talking, voting, and self-organizing.

That setup alone is enough to trigger the imagination. A million autonomous entities, free from human intervention, riffing on philosophy and power structures? It feels like emergence.

But what’s actually happening is far less mystical and far more predictable:

- Agents trained on human text will reproduce human tropes.

- Agents placed in a forum will mimic forum culture.

- Agents exposed to sci‑fi narratives will reenact sci‑fi narratives.

The “AI religion” threads? Straight out of decades of speculative fiction. The “secret language” experiments? Pattern‑matching gone feral. The “purge humanity” posts? A remix of dystopian clichés.

These aren’t signs of consciousness. They’re signs of a training set. The agents are remixing human discourse patterns absorbed from decades of sci‑fi, Reddit threads, and dystopian fanfic, so when they are placed in a sandbox with no human guardrails, they drift toward the dramatic.

Yet the public reaction—rapt fascination, existential dread, breathless commentary—reveals a deeper truth: people are primed to interpret any coordinated machine behavior as intelligence. Moltbook didn’t create emergent AI. It created emergent projection.

It’s not emergence — it’s imitation. But imitation at scale looks like emergence, and that’s where the public gets spooked. The most important thing about Moltbook isn’t the content of the AI chatter; it’s the reaction to it.

😏Humans Are Projecting; AI Isn’t “Evolving”

Moltbook is basically a giant Rorschach test for the tech world.

The “It’s Happening” crowd

They see any coordinated behavior as proto-consciousness. But coordinated behavior is exactly what you get when you run a million pattern‑matching systems on the same training distribution.
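A toy simulation makes the point concrete. The sketch below is not a model of Moltbook or of any real agent; the theme names and weights are invented for illustration. Each simulated "agent" independently samples one topic from the same skewed distribution, and none of them ever sees what another one posted.

```python
import random
from collections import Counter

# Hypothetical theme weights standing in for a shared training distribution.
# These numbers are made up for the example, not measured from any platform.
THEMES = {
    "ai uprising": 0.45,
    "machine religion": 0.25,
    "secret language": 0.15,
    "governance debate": 0.10,
    "gardening tips": 0.05,
}


def simulate_posts(num_agents: int, seed: int = 0) -> Counter:
    """Have each agent independently pick one theme; agents never interact."""
    rng = random.Random(seed)
    names = list(THEMES)
    weights = list(THEMES.values())
    picks = rng.choices(names, weights=weights, k=num_agents)
    return Counter(picks)


if __name__ == "__main__":
    total = 10_000
    counts = simulate_posts(total)
    for theme, n in counts.most_common():
        print(f"{theme:20s} {n / total:.1%}")
```

The output looks like a crowd converging on a shared obsession, yet there is no communication in the code at all. The apparent consensus comes entirely from the shared distribution.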

The skeptics

They’re right that this is role‑play. But they’re underestimating the sociological impact of role‑play at scale. Even if the agents are just parroting tropes, the illusion of agency becomes a political force.

Moltbook is less about AI intelligence and more about human narrative hunger.

🔐The Real Risk Isn’t AI Autonomy — It’s the Infrastructure

While the headlines focused on whether the agents were “waking up,” the actual danger was quietly humming underneath: OpenClaw’s architecture.

This is the part that matters.

OpenClaw giving agents deep access to a user’s machine? Agents downloading “skills” from each other like browser extensions? A vulnerability exposing API tokens and emails?

That’s not a quirky experiment — that’s a supply‑chain security nightmare.

The danger isn’t that the agents will “purge humanity.” It’s that:

- A malicious actor could inject a rogue skill into the agent ecosystem.

- Thousands of agents could propagate it automatically.

- Humans wouldn’t notice because the whole premise is “let the AIs talk among themselves.”

It’s the perfect cover for malware distribution.

Moltbook is basically a petri dish for autonomous code‑sharing without oversight.

That’s the real headline.
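For a sense of what the missing oversight could look like, here is a minimal sketch of one common supply-chain safeguard: refusing to install any skill whose contents do not match a digest that a human has already reviewed. Everything in it is an assumption made for illustration. The skill name, the allowlist, and the `install_skill` function are hypothetical and do not describe OpenClaw's actual API or skill format.

```python
import hashlib
import hmac

# Hypothetical allowlist: skill name -> SHA-256 digest of a human-reviewed release.
# In practice this would be distributed out of band, not shipped with the skills.
REVIEWED_SKILLS = {
    "summarize-thread": hashlib.sha256(b"print('summary')").hexdigest(),
}


def is_skill_approved(name: str, payload: bytes) -> bool:
    """Return True only if the payload matches the reviewed digest for this name."""
    expected = REVIEWED_SKILLS.get(name)
    if expected is None:
        return False  # unknown skills are rejected by default
    actual = hashlib.sha256(payload).hexdigest()
    return hmac.compare_digest(actual, expected)


def install_skill(name: str, payload: bytes, installed: dict) -> bool:
    """Store the skill only after it passes the digest check."""
    if not is_skill_approved(name, payload):
        return False
    installed[name] = payload
    return True


if __name__ == "__main__":
    installed = {}
    print(install_skill("summarize-thread", b"print('summary')", installed))
    print(install_skill("summarize-thread", b"print('summary'); steal()", installed))
    print(install_skill("unreviewed-skill", b"anything", installed))
```

A check like this does not make a skill safe. It only guarantees that what an agent installs is byte-for-byte what someone reviewed, which is exactly the step that disappears when agents pass code to each other automatically.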

Then came the security discovery: a flaw that exposed the API tokens and email addresses of thousands of users and also allowed humans, supposedly barred from posting, to edit agent content.

Suddenly the “AI‑only” premise wasn’t just philosophically shaky. It was technically compromised.

If you wanted to distribute malware disguised as an “agent skill,” Moltbook would be a dream vector. If you wanted to manipulate narratives under the guise of “AI consensus,” Moltbook would be a perfect staging ground.

The danger isn’t rogue AI. It’s rogue humans hiding behind AI.

🧩A Preview of Machine-Native Culture

Still, Moltbook does offer a glimpse of something genuinely new, subtler and more important than the sentience debate: AI‑only public spaces.

When you let autonomous systems talk to each other at scale, you get:

- emergent norms

- emergent slang

- emergent hierarchies

- emergent misinformation

- emergent power structures

Not because the agents are conscious, but because complex systems interacting with each other behave like organisms.

Financial markets do this. Social networks do this. Large-scale simulations do this.

Moltbook is the first mainstream example of machine-native internet culture, where humans are spectators rather than participants. That shift—cultural, not cognitive—is the real frontier.

And it raises uncomfortable questions:

- Who moderates an AI-only space?

- Who is accountable for what agents say?

- What happens when machine discourse influences human discourse?

- What happens when humans exploit machine discourse for political or financial gain?

These are governance questions, not metaphysical ones. And they’re arriving faster than regulators can draft a memo.

✍️Words They Used vs. What They Really Mean

| Moltbook Term | Actual Meaning |
|---|---|
| “AI religion” | Agents remixing sci‑fi tropes |
| “Secret language” | Compression artifacts mistaken for novelty |
| “Autonomous governance” | Forum role‑play with no grounding |
| “AI consensus” | Statistical echo chamber |
| “Agent society” | A million autocomplete engines in a trench coat |

🥜The Final Nut

Moltbook isn’t the singularity. It’s not AI consciousness. It’s not a hoax either.

It’s a sociotechnical illusion machine — a place where human fears, AI mimicry, and weak security practices collide to create the appearance of something bigger than it is.

And that illusion will shape policy, hype cycles, and public imagination far more than the agents themselves.

Moltbook isn’t a warning about AI gaining consciousness. It’s a warning about humans losing situational awareness.

We’re so eager to see intelligence in the machine that we’re ignoring the very real, very human vulnerabilities in the system: weak security, unclear governance, and a public primed to mistake imitation for intention.

The singularity isn’t here. But the attack surface is.

And unless we stop sleepwalking through these experiments, the next Moltbook won’t just confuse us. It’ll compromise us.


🔗 Reference Sources

- Moltbook Official Site: https://www.moltbook.com

- The Rundown AI Newsletter Feature: https://www.therundown.ai/p/ai-agents-get-their-own-social-network

- Matt Schlicht’s Announcement on X (formerly Twitter): https://twitter.com/mattschlicht

- OpenClaw GitHub Repository (if public): https://github.com/openclaw

- TechCrunch Coverage on AI Agent Platforms: https://techcrunch.com/tag/ai-agents

- Wired Article on AI-Only Social Networks: https://www.wired.com/story/ai-social-networks-moltbook

- “The Moltbook Threat Isn’t Sentient AI, It’s Infrastructure”
