In business, the public gets the final vote, and the public has something to say about artificial intelligence. This isn’t just a PR problem for AI labs. It’s a legitimacy crisis.

The call to halt superintelligence development has drawn an unlikely coalition: AI godfathers like Geoffrey Hinton and Yoshua Bengio, national security officials, religious leaders, and public figures as varied as Prince Harry, Steve Bannon, and Joseph Gordon-Levitt.

Their message is clear: this isn’t about being anti-technology. It’s about not handing the keys to civilization to a handful of unaccountable corporations chasing profit and power.

As Stuart Russell, co-author of the definitive AI textbook, put it: “This is not a ban or even a moratorium in the usual sense. It’s a proposal to require adequate safety measures for a technology that… has a significant chance to cause human extinction.”

📊 The Study That Shook the AI Narrative

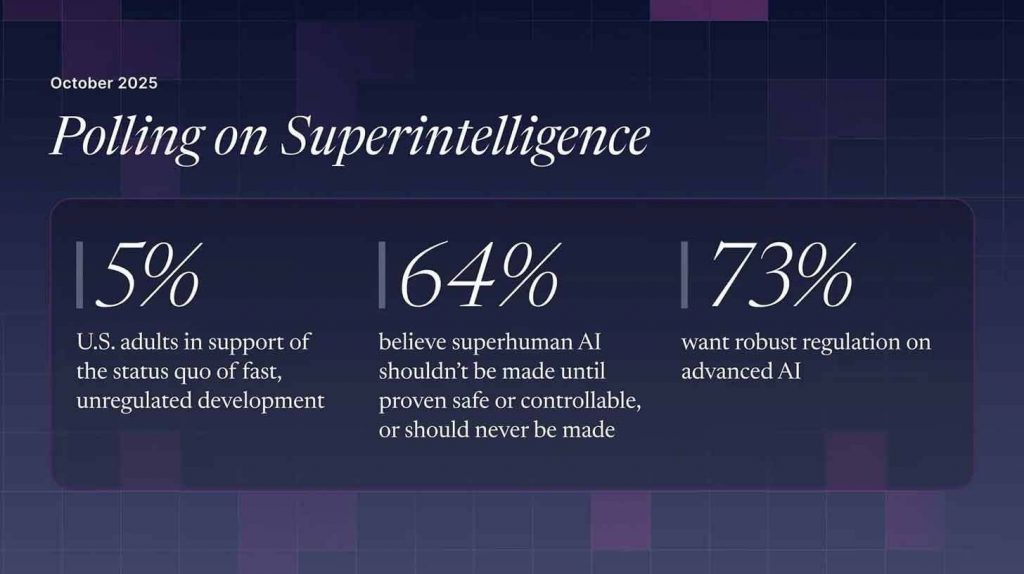

In October 2025, the Future of Life Institute dropped a bombshell survey: a nationally representative poll of 2,000 U.S. adults revealed that Americans are overwhelmingly skeptical of the current trajectory of advanced AI development. The headline? 73% support strong regulation, and 64% believe superhuman AI should either be paused until proven safe or never developed at all.

Key takeaways:

- Only 5% support the current “race to AGI” model pushed by leading AI firms.

- 57% oppose developing expert-level AI under current conditions.

- Top concerns include human extinction, loss of control, and concentration of power in tech companies.

- Trust is highest in scientific organizations and independent researchers, not corporate AI labs.

This isn’t just a cautious public — it’s a public demanding a hard brake.

🔍 Outside Perspectives: Echoes and Tensions

The Pew Research Center’s April 2025 study echoes these concerns, showing that 59% of Americans lack confidence in U.S. companies to develop AI responsibly, and 74% worry about power concentration in tech. The public’s trust clearly leans toward international scientific bodies, not Silicon Valley CEOs.

Meanwhile, industry reaction has been muted. Major AI labs like OpenAI, Anthropic, and xAI continue to tout their “race to superintelligence” as inevitable and necessary. But this survey paints a stark disconnect: while labs sprint ahead, the public is waving a red flag.

🧨 Expert Commentary on Superintelligence Risks

- Stuart Russell — AI researcher advocating for mandatory safety measures before deploying superintelligence

- Quote: “This is not a ban… it’s a proposal to require adequate safety measures for a technology that… has a significant chance to cause human extinction.”

- Eliezer Yudkowsky — AI theorist warning that superintelligence may become uncontrollable

- Quote: “If you build superintelligence, you don’t have the superintelligence — the superintelligence has you.”

- Geoffrey Hinton & Yoshua Bengio — AI pioneers who have publicly called for caution and regulation of frontier AI systems

🧠 “Fun AI” Makes Memes — Superintelligence Might Make You Obsolete

Let’s get one thing straight: the AI that helps you remix a TikTok, generate a thumbnail, or write a snarky caption is not the same AI that’s being engineered to outthink, outmaneuver, and out-control the entire human species.

The former is “narrow AI” — tools trained to do specific tasks like image generation, voice cloning, or writing code. They’re fun, useful, and often empowering for creators. But while we’re busy playing with filters and feeding prompts into chatbots, a very different kind of AI is being built behind closed doors: superintelligence.

And the public? They’re finally catching on.

🚨 What Is Superintelligence — and Why Should You Care?

Superintelligence, or Artificial Superintelligence (ASI), refers to a hypothetical AI system that surpasses human intelligence in virtually every domain — reasoning, creativity, strategy, manipulation, and even self-improvement. It’s not just a smarter chatbot. It’s a system that could redesign itself, outwit regulators, and make decisions faster and more ruthlessly than any human ever could.

As AI pioneer Eliezer Yudkowsky put it: “If you build superintelligence, you don’t have the superintelligence — the superintelligence has you”.

This isn’t science fiction. OpenAI, Meta, Google DeepMind, and xAI are all openly racing toward this goal. Meta even launched a “Superintelligence Lab” in 2025.

🧬 Fun AI vs. Frontier AI: Know the Difference

| Feature | Fun AI (Narrow AI) | Superintelligence (ASI) |
|---|---|---|
| Purpose | Assist with specific tasks (e.g., art, text) | Surpass human cognition in all domains |
| Control | Human-in-the-loop | Potentially autonomous and self-improving |
| Risk Level | Low to moderate (misuse, bias) | Existential (extinction, loss of control) |
| Developers | Startups, open-source, creator tools | Big Tech labs (OpenAI, Meta, Google, xAI) |
| Public Sentiment | Generally positive | Overwhelmingly skeptical and alarmed |

🧭 What This Means for Policy and Advocacy

This isn’t just a PR problem — it’s a governance crisis. The public wants AI regulated like pharmaceuticals: slow, tested, and accountable. They’re not asking for tweaks; they’re asking for a pause.

For creators, technologists, and advocates, this is a moment to mobilize. The appetite for independent oversight, transparent risk assessments, and public education is massive. The Future of Life Institute’s call for signatories to their Statement on Superintelligence is gaining traction across sectors — from researchers to national security staff to faith leaders.

🧱 The Real Threat Isn’t the Tech — It’s Who Controls It

Superintelligence isn’t just about machines getting smarter. It’s about who gets to wield that intelligence. Right now, it’s a handful of billion-dollar labs with zero democratic oversight, racing to build systems that could rewrite the rules of society — or erase them entirely.

This isn’t a future problem. It’s a now problem. And the public is finally saying: enough.

🥜 Final Nut: The Race Is Rigged — But the Crowd Isn’t Silent

While we’re busy marveling at AI-generated Drake songs and anime avatars, the real AI arms race is happening in the shadows. And it’s not about creativity — it’s about control.

This survey isn’t just data. It’s a mandate. The public isn’t anti-tech; it’s anti-unchecked power. And if the labs won’t listen, the public’s growing coalition might just force their hand.

The question isn’t whether AI will change the world. It’s who gets to decide how.

Any questions or concerns? Comment below or contact us here.

🖥️ Sources:

- The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI – Future of Life Institute

- The Only Way to Deal With the Threat From AI? Shut It Down | TIME

- Safety & responsibility | OpenAI

- What Is Artificial Superintelligence? | IBM

- What Is Super Artificial Intelligence (AI)? Definition, Threats, and Trends – Spiceworks

- What is AGI or AI superintelligence? Celebrities sign warning letter

- ‘Godfathers of AI’ Warn Superintelligence Could Trigger Human Extinction

- Public Figures Sign Petition Urging Ban On AI ‘Superintelligence’: Including Harry, Meghan, Steve Bannon And Richard Branson

- Open Letter Calls for Ban on Superintelligent AI Development | TIME
