The Real Exploit, Defining the Hook:

The real exploit feeds on public ignorance of who the real data traders are. Let’s start with the most common scary catchphrase: “Zero-Day Exploit.”

🕳️ What Is a Zero-Day Exploit?

A zero-day exploit is an attack that takes advantage of a vulnerability in software, hardware, or firmware that is:

- Unknown to the vendor or developer

- Actively exploited by attackers

- Still without an official patch or fix

The term “zero-day” comes from the fact that developers have had zero days to fix the flaw—because they didn’t even know it existed.
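To make the definition concrete, here is a minimal sketch of it as a date check. The `Advisory` record and its field names are hypothetical, not any real vulnerability feed format, and "unknown to the vendor" is approximated by "no patch released yet," which is a simplification.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Advisory:
    """Hypothetical record for one vulnerability report (not a real feed format)."""
    name: str
    first_exploited: Optional[date]  # None if no exploitation has been observed
    patch_released: Optional[date]   # None if no official fix exists yet


def is_zero_day(advisory: Advisory, today: date) -> bool:
    """Return True only while the flaw is being exploited and no patch is out."""
    # No observed exploitation (yet) means it is not a zero-day exploit.
    if advisory.first_exploited is None or advisory.first_exploited > today:
        return False
    # Assumption: a released patch stands in for "the vendor knows and has fixed it".
    patched = advisory.patch_released is not None and advisory.patch_released <= today
    return not patched
```

Under this sketch, the same flaw stops counting as a zero-day the day its patch ships, no matter what the headline says afterward.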

🔓 Why Are They So Dangerous?

- No defense: Since the vulnerability is unknown, antivirus tools, firewalls, and intrusion detection systems often fail to catch it.

- High value: Governments, cybercriminals, and even corporations pay top dollar for zero-days through exploit brokers and black markets.

- Silent breach: Attackers can infiltrate systems, steal data, or install malware without triggering alarms.

🧠 Real-World Example

Our recent Qwiet AI newsletter mentioned:

- WinRAR Zero-Day (CVE-2025-8088): A path traversal flaw actively exploited before a patch was released.

- CyberArk & HashiCorp Vault Exploits: Chained zero-days enabling remote code execution and MFA bypass.

These are a couple of textbook cases—attackers found the flaws, used them in the wild, and only later did the vendors scramble to patch.
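For readers wondering what a "path traversal flaw" looks like in practice, here is a minimal sketch of the general bug class. It is not WinRAR's code and does not reproduce the details of CVE-2025-8088. The function name `is_safe_member` is hypothetical. It shows the kind of check an extractor could run on each archive entry name before writing the file to disk.

```python
from pathlib import PurePosixPath, PureWindowsPath


def is_safe_member(member_name: str) -> bool:
    """Reject archive entry names that could escape the extraction directory."""
    # Treat backslashes and forward slashes as the same separator.
    normalized = member_name.replace("\\", "/")
    path = PurePosixPath(normalized)
    # Absolute paths (including //server/share forms) ignore the target folder.
    if path.is_absolute():
        return False
    # Windows drive prefixes such as "C:" also ignore the target folder.
    if PureWindowsPath(member_name).drive:
        return False
    # Any ".." component can climb out of the target folder.
    # This is deliberately conservative: "a/../b" is rejected too.
    return ".." not in path.parts
```

An entry named `docs/readme.txt` passes, while `..\..\Startup\evil.exe` is rejected. An extractor that skips a check like this will happily write a file wherever the archive's author wants it.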

🛡️ How Are Zero-Days Discovered?

- White-hat researchers: Ethical hackers report them to vendors for responsible disclosure.

- Black-hat hackers: Sell them to the highest bidder or use them for espionage, ransomware, or sabotage.

- Bug bounty programs: Offer rewards for early detection before exploitation occurs.

However, The Really Real Exploit Is Masking Data Theft in Plain Sight as Security

🎭 Act I: The Spectacle of Fear

Every month, inboxes light up with alerts about “actively exploited zero-days,” “critical RCEs,” and spyware with names like DevilsTongue or Dragon’s Breath. These aren’t just technical disclosures; they’re psychological operations. The naming conventions alone evoke medieval dread, designed to trigger urgency and compliance.

“Zero-day” used to mean a vulnerability discovered before a patch existed. Now it’s a marketing term—used even after the fix is out—to dramatize the threat and justify the sale.

This fear-based framing isn’t just about awareness—it’s about conditioning. It primes users to accept invasive security measures, buy redundant tools, and trade autonomy for the illusion of safety.

🛡️ Act II: The Illusion of Protection

Enter the parade of solutions: VPNs that promise anonymity but funnel traffic through centralized servers. Antivirus suites that scan your files while uploading telemetry. “Secure” browsers that track your clicks in the name of optimization.

The cybersecurity industry thrives on this tension:

- Fear of breach → Purchase of protection

- Purchase of protection → Consent to surveillance

- Consent to surveillance → Data monetization

And the kicker? The user never sees a dime from the data they generate.

As Privacy International points out, our devices and infrastructure are now designed for data exploitation. Surveillance capitalism reduces people to behavioral datasets, and the systems that classify us are often more trusted than our own testimony.

🔍 Act III: The Exploit Behind the Exploit

The true zero-day isn’t in your firmware—it’s in your psychology. It’s the engineered belief that safety requires surrender. That privacy is negotiable. That your digital footprint is a fair trade for “peace of mind.”

Meanwhile, corporations collect, analyze, and sell your data:

- Without transparency

- Without consent

- Without profit-sharing

According to Brookings, 79% of consumers want compensation when their data is shared. Yet the legal frameworks around data ownership remain murky. Treating personal data as property may sound empowering, but it risks commodifying identity and inducing people to trade away privacy for pennies.

The real exploit? DTA Theft—Data, Trust, and Agency—stolen in broad daylight by platforms that profit from your digital life while denying you ownership or dividends.

🧩 Act IV: Who Owns Your Data?

Let’s get philosophical. If your data is generated by you, shouldn’t you own it? Not so fast.

As Squaring the Net explains, data ownership is a tangled web of legal rights, responsibilities, and regulatory gaps. GDPR and CCPA offer some protections, but enforcement is patchy, and corporations often sidestep accountability through vague consent forms and buried clauses.

Your name, your location, your habits—they’re all part of your identity. But they’re also part of someone else’s business model. And once collected, they’re often repackaged, sold, and weaponized against you in ways you’ll never see.

💸 Act V: The Monetization Machine

The lifecycle of stolen or harvested data is disturbingly efficient:

- Collected via apps, trackers, or breaches

- Sold on dark web marketplaces or to advertisers

- Used to profile, predict, and manipulate behavior

Even legitimate companies exploit this cycle. As Forbes notes, data sharing between brands and retailers can boost profits—but rarely includes the consumer in the revenue loop. The idea of a “data dividend” has been floated by lawmakers, but implementation remains elusive.

Meanwhile, the RAND Corporation confirms that cybercriminals and state-sponsored actors monetize stolen data through espionage, extortion, and resale—often with more agility than the companies that claim to protect it.

But here’s the twist: you don’t need to be hacked for your data to be sold. Even if you use VPNs, encrypted messaging, and privacy browsers, your data is still collected, aggregated, and traded—legally—by the very platforms you trust.

🏢 The Corporate Data Trade

Major corporations operate vast data brokerage networks:

- Meta (Facebook, Instagram): Tracks user behavior across apps and websites—even if you don’t have an account.

- Google: Collects search history, location data, voice recordings, and app usage across Android and Chrome ecosystems.

- Amazon: Uses purchase history, Alexa voice data, and even Ring camera footage to build consumer profiles.

- Oracle, Acxiom, Experian: These data brokers buy and sell consumer data to advertisers, insurers, and political campaigns.

According to Privacy International, these companies often operate in legal gray zones, using vague consent forms and bundled permissions to justify mass data extraction.

Even apps that claim to be “secure” or “private” often include third-party SDKs that siphon data to advertisers. As Consumer Reports notes, data brokers can infer sensitive traits—like income, health status, or political leanings—without ever asking you directly.

🧠 The Illusion of Control

Security tools may encrypt your traffic or block trackers, but they can’t stop:

- In-app telemetry

- Behavioral analytics

- Cross-device fingerprinting

- Consent laundering via Terms of Service

This means your data is traded on the information highway whether you like it or not. And unlike stolen data, this trade is legal, profitable, and invisible.
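Here is a minimal sketch of why a VPN alone doesn't defeat fingerprinting. The attribute names and values are hypothetical, and real trackers use many more signals plus fuzzy matching. The point is only that an identifier derived from the device itself stays the same when the IP address changes.

```python
import hashlib
import json


def fingerprint(attributes: dict) -> str:
    """Hash a set of device attributes into a short, stable identifier."""
    # Sorting keys makes the hash independent of dictionary ordering.
    canonical = json.dumps(attributes, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical attributes a script or SDK might read from one device.
device = {
    "user_agent": "ExampleBrowser/1.0",
    "screen": "1920x1080",
    "timezone": "America/Chicago",
    "fonts": ["Arial", "Helvetica", "Noto Sans"],
    "language": "en-US",
}

# Same device, two sessions: one on the home connection, one through a VPN.
# The IP addresses are documentation-range placeholders.
home_session = {"ip": "203.0.113.7", "device": fingerprint(device)}
vpn_session = {"ip": "198.51.100.42", "device": fingerprint(device)}

# The IP differs, but the device identifier still links the two sessions.
same_device = home_session["device"] == vpn_session["device"]
```

Cross-device linking builds on identifiers like this one by tying them to shared logins, networks, or behavior, none of which a VPN changes.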

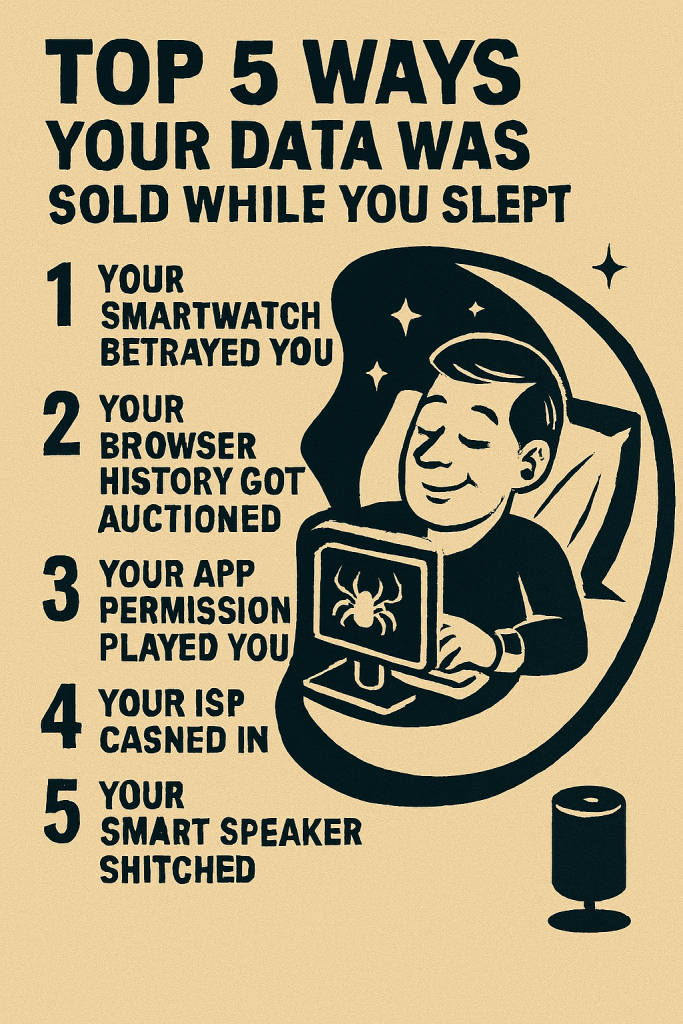

😴💰 Top 5 Ways Your Data Was Sold While You Slept

While you were dreaming of encrypted utopias, your digital shadow was hard at work making someone else rich. Here’s how:

1. Your Smartwatch Betrayed You

That sleep tracker didn’t just log your REM cycles—it pinged third-party servers with biometric data, location history, and app usage. Advertisers now know when you’re most vulnerable to impulse buys.

2. Your Browser History Got Auctioned

Even in Incognito mode, your DNS requests and device fingerprint were quietly bundled and sold to data brokers. Someone out there knows you searched “how to delete cookies” at 2:14 AM.

3. Your App Permissions Played You

That meditation app you downloaded for “peace of mind”? It shared your device ID, microphone access logs, and mood tags with ad networks. Serenity sold separately.

4. Your ISP Cashed In

Your internet provider logged every site you visited, then sold anonymized “behavioral insights” to marketers. Translation: they monetized your midnight doomscrolling.

5. Your Smart Speaker Snitched

Even if you didn’t say “Hey Alexa,” passive listening captured ambient audio cues. Those were parsed for emotional tone and product mentions—then fed into predictive ad models.

🌰 The Final Nut Critique and Call to Action

So let’s recap:

- You’re bombarded with fear-based alerts about “zero-days” that are already patched.

- You’re sold protection tools that collect more data than the threats they claim to block.

- Your personal information is mined, monetized, and manipulated—without compensation.

- And the legal system still debates whether you “own” your own identity.

This isn’t cybersecurity. It’s Data Theater™—a high-budget production where the audience pays for the ticket, the popcorn, and the surveillance.

🔥 Call to Action

Let’s flip the script.

- Demand transparency: Every platform should disclose how your data is used, sold, and valued.

- Push for profit-sharing: If your data generates revenue, you deserve a cut.

- Support data ownership legislation: Real rights, not just opt-out buttons.

- Build and use tools that respect agency: Decentralized, open-source, user-first.

And most importantly: let’s stop calling it “security” when it’s really data extraction. Let’s start paying attention to The Real Exploit, which infringes on our privacy and ownership rights and profits at our expense in the name of “security.”


The Real Exploit: Sources for Further Discussion

🧠 Legal & Regulatory Analysis

- Gibson Dunn’s 2025 U.S. Cybersecurity and Data Privacy Review — Covers federal/state legislation, enforcement trends, and biometric data laws

- Squaring the Net — Offers nuanced takes on data ownership, consent, and EU digital rights frameworks (especially post-GDPR)

🔍 Technical & Analytical Depth

- Cambridge University Press – Data Analytics for Cybersecurity — Breaks down how log data, payloads, and telemetry are used to profile users and detect threats

- Intervalle Technologies – Cybersecurity and Data Privacy Best Practices — A digestible overview of evolving threats and the illusion of control in consumer-grade tools

🎭 Philosophical & Cultural Critique

- Shoshana Zuboff’s The Age of Surveillance Capitalism — A foundational text on how behavioral data is weaponized for profit and control

- Bruce Schneier’s blog and books — Especially Data and Goliath, which explores how governments and corporations exploit data asymmetries

🧩 Policy & Advocacy

- Privacy International — Investigative reports on data brokers, consent laundering, and surveillance infrastructure

- Consumer Reports Digital Lab — Research on inferred traits, app permissions, and deceptive UX patterns
