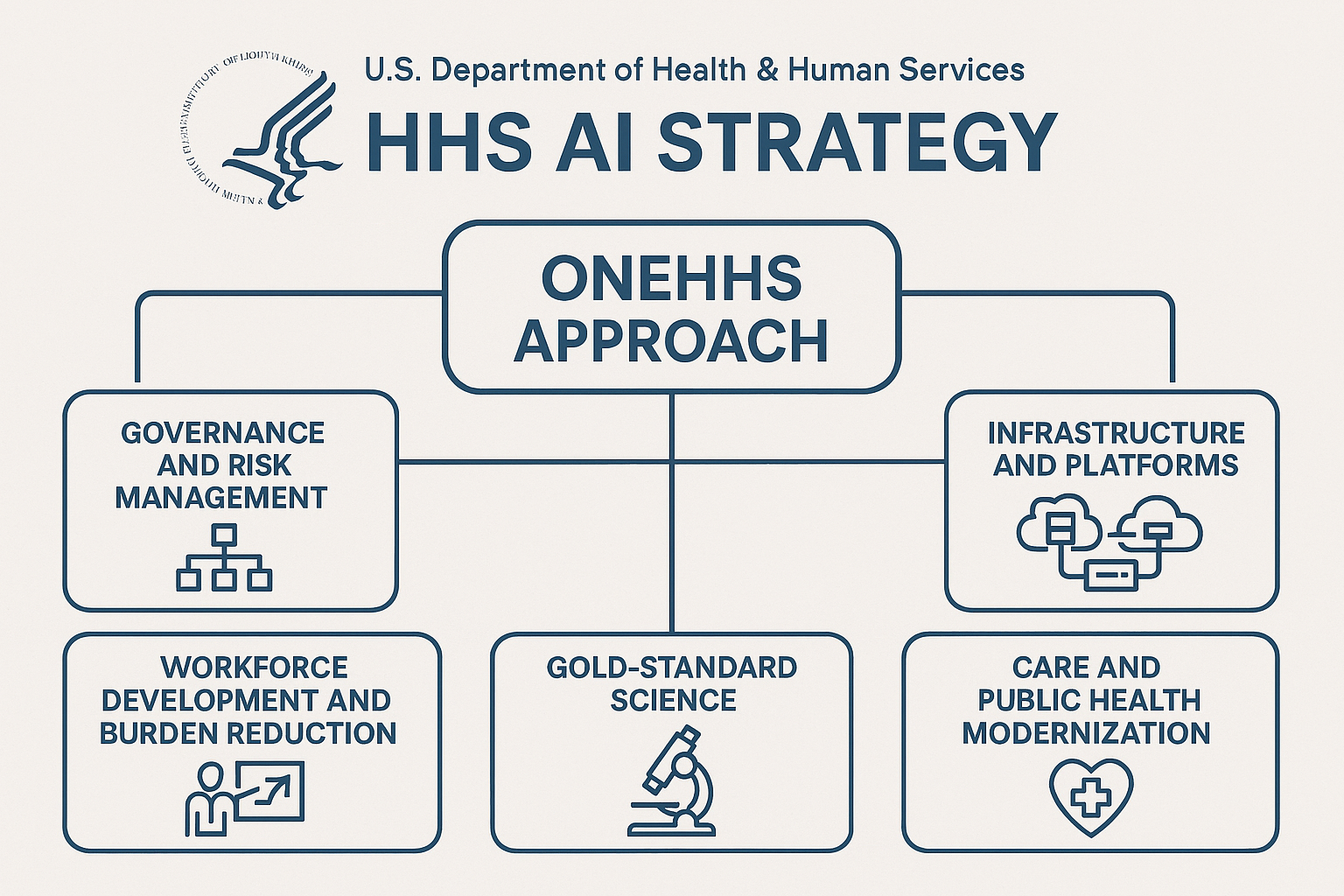

The U.S. Department of Health and Human Services has launched a department-wide AI Strategy centered on five pillars (governance, infrastructure, workforce, “gold-standard” science, and care/public health modernization) and anchored by a “OneHHS” approach to unify data, compute, and models across its divisions. The official announcement positions AI as a catalyst for internal efficiency first, with private-sector collaboration to follow, and features strong endorsements from the department’s AI leadership and congressional AI champions.

🏛️Official HHS announcement and primary details

The Department of Health and Human Services (HHS) officially unveiled its AI Strategy on December 4, 2025, framing it as a “OneHHS” approach to unify AI infrastructure and practices across divisions like CDC, CMS, FDA, and NIH. The press release highlights five pillars: governance and risk management; infrastructure and platforms; workforce development and burden reduction; gold-standard science; and care and public health modernization. The announcement emphasizes internal efficiency first, with future engagement of private-sector stakeholders, and features comments from Deputy Secretary Jim O’Neill and Acting Chief AI Officer Clark Minor, alongside supportive statements from congressional AI leaders.

Coverage from Nextgov/FCW reinforces the five-pillar structure and the “OneHHS AI-integrated Commons” concept to rapidly develop, test, and deploy AI across environments, with AI impact assessments, independent reviews, inventories of use cases, and risk-proportionate controls to build public trust. It also notes data-sharing governance and efforts to standardize policies on data ownership and curation for machine legibility.

ExecutiveGov summarizes the pillars and adds detail on standardized minimum risk practices for high-impact AI and the plan to develop shared computing, data resources, testbeds, and models under the OneHHS AI-integrated Commons. It stresses workforce empowerment and rapid innovation as the strategy’s core objectives.

Healthcare Dive describes the initial focus on internal efficiency, the creation of a governance board, employee training at all levels, collaboration with the private sector, and identification of priority health conditions for AI tools. It also notes the outcome metrics HHS cites, such as reduced hospital readmissions, lower sepsis mortality, fewer unnecessary emergency department (ED) revisits, and improved maternal and infant outcomes.

Federal News Network (AP) situates the strategy within the broader Trump administration push to accelerate federal AI adoption and highlights the “try-first” culture and department-wide ChatGPT access, while raising questions about how sensitive health information will be protected. Experts quoted caution that speed should not compromise rigor and risk management, pointing to gaps in detail on implementation and governance specifics.

🔍Context, timeline, and related moves

Politico previously reported that HHS Secretary Robert F. Kennedy Jr. (RFK Jr.) told Congress in May 2025 that HHS was already “aggressively” implementing AI, recruiting tech talent from Palantir, Booz Allen, and Andreessen Horowitz, and exploring ways AI could shorten clinical trials. That testimony positions the December strategy as a formalization and expansion of an active agenda. HealthLeaders echoed those remarks, framing the “do more with less” AI posture across operations and data management.

GovInfoSecurity notes this strategy follows a significant departmental overhaul under Secretary Robert F. Kennedy Jr., with headcount reductions and a stated intent to break down silos via OneHHS. It cites 271 active or planned AI implementations in FY2024 and an estimated 70% increase in new use cases in FY2025, plus an AI governance board that meets at least twice per year and the department-wide availability of ChatGPT earlier in the year.

📑Media commentary and reactions

- Operational efficiency vs. data risk: Federal News Network (AP) underscores enthusiasm for modernization but flags unresolved questions about protecting highly sensitive health data, with experts urging rigorous standards—especially given leadership controversies and prior criticisms of data sharing practices. The piece stresses that ambition (centralized infrastructure, rapid deployment, AI-enabled workforce) must be matched with trustworthy governance and transparency.

- Governance mechanics and specificity: Healthcare Dive and GovInfoSecurity describe governance boards, risk protocols for “high-impact AI,” inventories, and monitoring, but note limited public detail on the “how”—fueling calls for clearer playbooks, auditability, and public reporting norms beyond general metric examples.

- Political signaling and trust climate: USA Today’s broader reporting on RFK Jr.’s public health messaging posture suggests a contested trust environment around HHS communications under current leadership, which may shape public acceptance of AI-enabled health initiatives—even if technically sound.

- Industry alignment: ExecutiveGov and Nextgov/FCW emphasize alignment with White House AI directives and OMB guidance, and the push toward open-weight/open-source models in safe capacities. This resonates with federal tech adoption patterns but invites scrutiny on reproducibility standards and inter-agency data governance, especially in health contexts.

Media coverage paints a picture of a bold, internally focused foundation: the creation of an AI governance board, risk-proportionate controls, impact assessments, centralized resources, and workforce training to reduce administrative burden. Reporting highlights the OneHHS AI-integrated Commons designed to accelerate development and deployment across varied environments, and the intent to use open-source/open-weight models in safe capacities—paired with reproducible pipelines for validation in research contexts.

📝Key elements to watch

- OneHHS AI-integrated Commons: Shared compute, data, testbeds, and models to speed cross-division AI, raising both collaboration benefits and data governance/stewardship challenges.

- Risk-proportionate controls and oversight: Impact assessments, independent reviews, inventories, governance boards—solid scaffolding, but effectiveness depends on enforcement, transparency, and external scrutiny.

- Workforce enablement: Training pathways, approved tool repositories, prompt libraries, and secure deployment pipelines, which can reduce administrative burdens while requiring strong guardrails to avoid model misuse or overreliance.

- Research reproducibility: Centralized AI R&D approaches, benchmarks, reproducible pipelines, and safe use of open models—promising for science acceleration if paired with robust validation and bias mitigation.

- Public health and clinical support: Diagnostic and preventive analytics with monitoring, positioned as augmentation (not replacement) for clinicians. Metrics proposed are tangible but need transparent baselines and attribution to AI initiatives to be credible.
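To make the "risk-proportionate controls" item above more concrete, here is a minimal Python sketch of a use-case inventory in which the required controls scale with a risk tier. Everything in it is an illustrative assumption: the tier names, the `CONTROLS_BY_TIER` mapping, the `UseCase` fields, and the example entry are hypothetical and are not taken from the HHS strategy or any published HHS schema.

```python
from dataclasses import dataclass

# Hypothetical mapping from risk tier to required controls.
# The tiers and control names are assumptions for illustration only.
CONTROLS_BY_TIER = {
    "low": ["inventory_entry"],
    "moderate": ["inventory_entry", "impact_assessment"],
    "high_impact": [
        "inventory_entry",
        "impact_assessment",
        "independent_review",
        "ongoing_monitoring",
    ],
}


@dataclass
class UseCase:
    """One hypothetical row in a department-wide AI use-case inventory."""
    name: str
    division: str
    risk_tier: str
    completed_controls: frozenset = frozenset()


def missing_controls(use_case):
    """Return the controls still outstanding for this use case's tier, in order."""
    required = CONTROLS_BY_TIER[use_case.risk_tier]
    return [c for c in required if c not in use_case.completed_controls]


def deployable(use_case):
    """A use case is deployable only when no required control is outstanding."""
    return not missing_controls(use_case)


# Hypothetical example entry: a high-impact tool with two controls completed.
example = UseCase(
    name="claims triage summarizer",
    division="CMS",
    risk_tier="high_impact",
    completed_controls=frozenset({"inventory_entry", "impact_assessment"}),
)
print(missing_controls(example))
print(deployable(example))
```

The point of the sketch is the design choice rather than the specifics: if the inventory itself records which controls each tier requires, enforcement and public reporting can be checked mechanically instead of relying on narrative assurances.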

This strategy lands amid a broader administration push to adopt AI across government and HHS’s own restructuring. According to GovInfoSecurity, the department counted 271 active or planned AI implementations in FY2024 and expected roughly a 70% increase in new use cases in FY2025. Prior testimony from RFK Jr. foreshadowed aggressive AI adoption and high-profile talent recruitment, as well as ambitions to compress clinical trial timelines using AI. Together, these make the new framework feel more like a codification than a fresh start.

📈Risks and unanswered questions

- Data protection and consent: How OneHHS will technically segregate, anonymize, and govern health data remains only partially specified, especially given cross-division sharing and potential private-sector collaborations. That gap has prompted concerns from experts and observers.

- Bias, safety, and auditability: The strategy references “gold-standard science” and benchmarks, but external validation protocols, incident reporting, and model lifecycle governance (including high-impact AI) need clear public documentation to build trust.

- Scope creep and private sector integration: The plan “paves the way” for co-creation with industry; concrete rules for procurement, IP/data ownership, model evaluation, and conflict-of-interest management will define whether collaboration enhances outcomes without eroding privacy or public trust.

- Transparency and metrics: HHS says it will publish results and develop specific metrics per pillar; public-facing dashboards, methodologies, and independent review timelines will be crucial to avoid performance theater.

Yet questions loom. Analysts and advocates warn that centralizing data and accelerating rollouts demands ironclad governance, especially for the most sensitive data Americans have: their health records. They urge specifics on safeguards, auditability, and transparency—especially as public trust in HHS communications has been contested under current leadership. Without detailed protocols for risk management, external validation, private-sector integration, and public reporting, ambition risks outpacing accountability.

If HHS delivers on its governance promises, publishes measurable outcomes, and invites independent scrutiny, the OneHHS strategy could streamline operations, reduce burdens on staff, and accelerate research in ways that tangibly improve patient outcomes. The balance to strike is clear: move fast enough to unlock value, but with controls, consent, and clarity strong enough to earn public trust.

🥜Final Nut: Promises & Trust

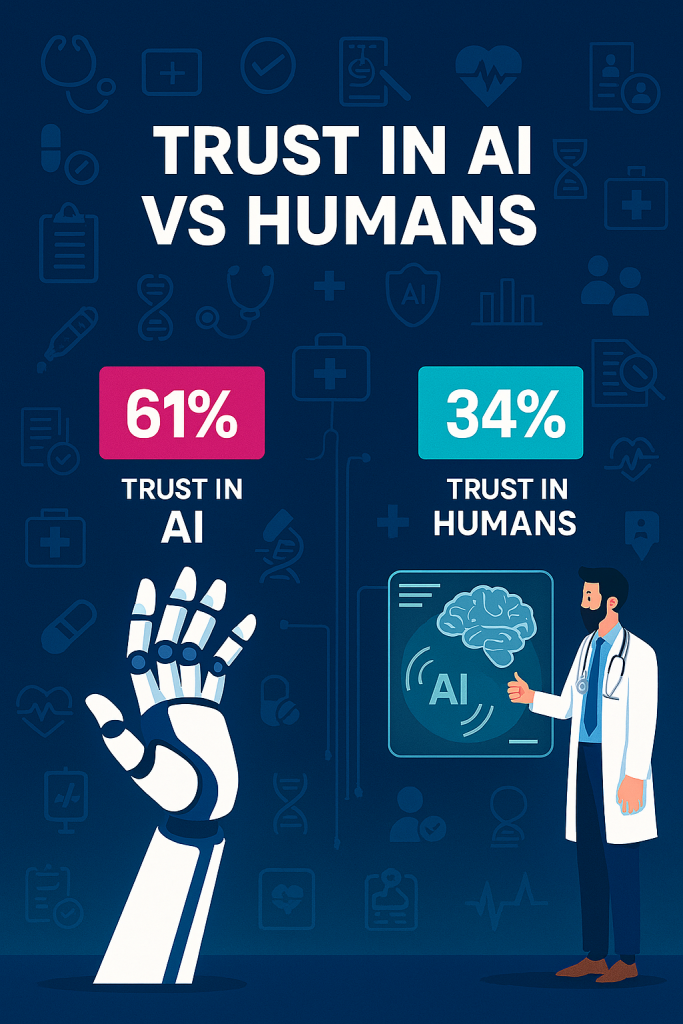

Public trust in medical institutions is at a historic low. The COVID-19 pandemic left scars not only in public health but in public perception—conflicting guidance, politicized messaging, and uneven enforcement of protocols eroded confidence in the very systems meant to safeguard lives. Many Americans now approach official health directives with skepticism, questioning whether decisions are driven by science, politics, or profit. This erosion of trust creates a fragile environment for any new initiative, especially one as transformative as AI in healthcare.

Yet paradoxically, AI may offer a pathway to rebuild confidence. Unlike human decision-makers, AI systems can be designed to operate with transparency, reproducibility, and data-driven rigor. Where human judgment is often clouded by bias, fatigue, or political pressure, AI can provide consistent outputs anchored in evidence. If paired with clear audit trails and independent validation, AI-driven diagnostics and policy recommendations could be perceived as more impartial than human voices—ironically flipping the narrative that machines are less trustworthy than people.

Contrary to popular fears of “black box” algorithms, many patients may come to trust AI precisely because it removes the human element they now doubt. Imagine a sepsis alert system that explains its reasoning step by step, or a maternal health predictor that shows the exact data patterns behind its recommendations. These systems don’t just deliver outcomes—they deliver receipts. In a climate where medical trust has been fractured, the promise of AI lies in its ability to be more transparent, more accountable, and ultimately more believable than the institutions that deploy it.

If HHS can harness this paradox—leveraging AI’s perceived neutrality while embedding strong governance and public-facing transparency—it may not only modernize operations but also repair the trust deficit left in the wake of COVID. The challenge is clear: AI must not become another opaque authority figure. Instead, it must be framed as a tool that democratizes insight, empowers patients, and proves that trust can be rebuilt not by rhetoric, but by reproducible results.


📚 Curated Source List

- Official Announcement

  - U.S. Department of Health and Human Services (HHS) press release, December 4, 2025 – unveiling the “OneHHS” AI strategy and its five pillars (governance, infrastructure, workforce, gold-standard science, care/public health modernization).

- Media Coverage & Analysis

  - Nextgov/FCW – detailed coverage of the five-pillar structure and the “OneHHS AI-integrated Commons,” including risk-proportionate controls, inventories of use cases, and data-sharing governance.

  - ExecutiveGov – summary of the pillars with emphasis on standardized minimum risk practices, shared computing/data resources, and workforce empowerment.

  - Healthcare Dive – focus on internal efficiency, governance board creation, employee training, collaboration with private sector, and outcome metrics (hospital readmissions, sepsis mortality, ED revisits, maternal/infant outcomes).

  - Federal News Network (Associated Press) – situates the strategy within the broader Trump administration AI adoption push, highlights “try-first” culture and department-wide ChatGPT access, while raising concerns about sensitive health data protection.

  - Politico – earlier reporting on RFK Jr.’s testimony to Congress (May 2025) about aggressive AI implementation, recruitment of tech talent, and ambitions to shorten clinical trials.

  - HealthLeaders – echoes the “do more with less” AI posture, emphasizing operational efficiency and data management.

  - GovInfoSecurity – notes departmental overhaul under RFK Jr., citing 271 active/planned AI implementations in FY2024, an estimated 70% increase in new use cases in FY2025, and governance board meetings.

  - USA Today – broader reporting on RFK Jr.’s public health messaging posture, highlighting contested trust environment around HHS communications.
